GoBackInTime

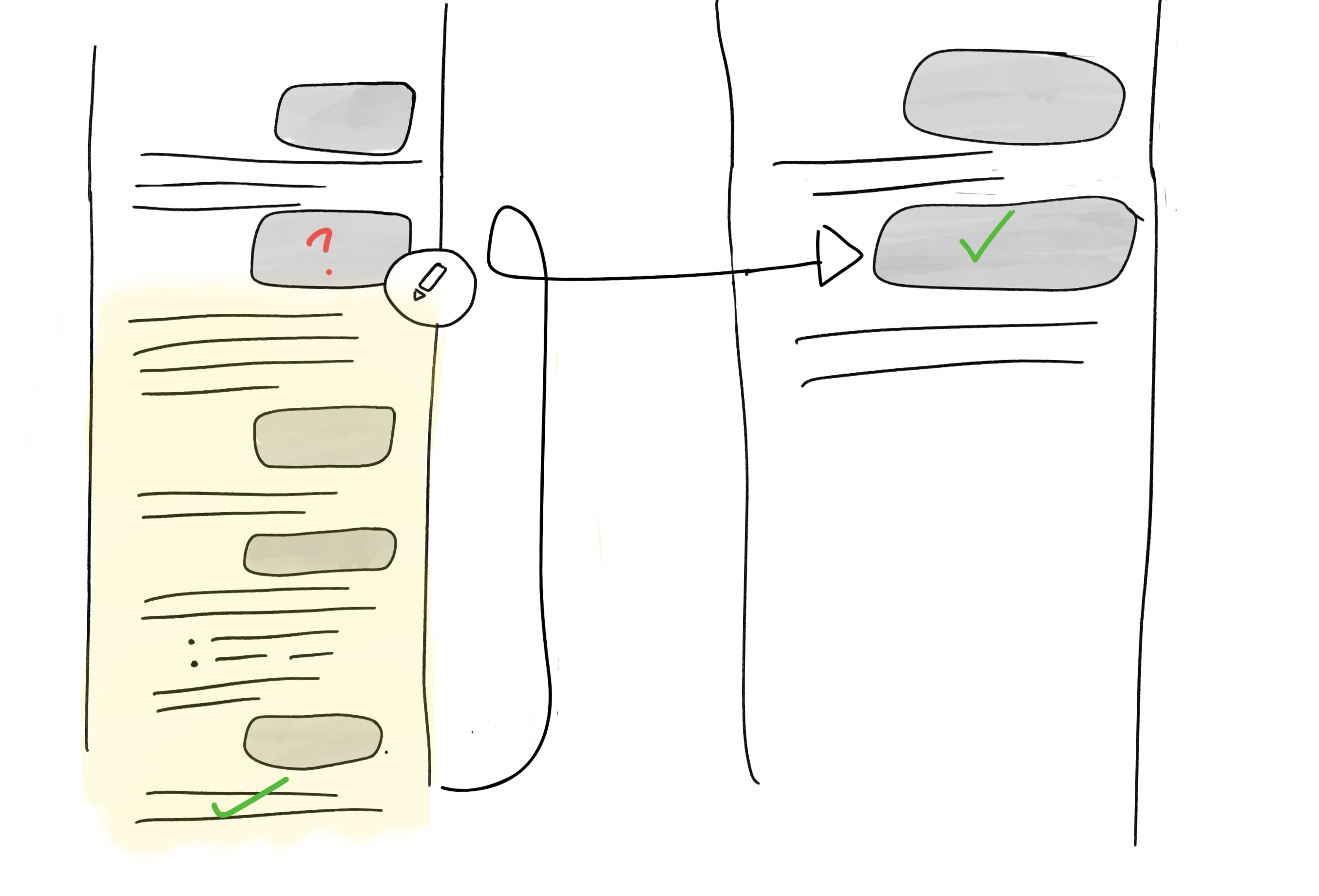

When working with LLMs, I often end up on “side quests”: back-and-forths where I try to figure something out with the AI’s help, or work out how to phrase something in a way the AI “understands”. This risks distracting the AI with the details of the side conversation rather than the main substance. Here’s a simple technique to prevent that: when you get a response that you don’t like, instead of having an extended dialogue to clarify it, **go back and edit the previous message**, adding clarifications or caveats. If the AI still makes a mistake, add that as an additional condition to your original message.

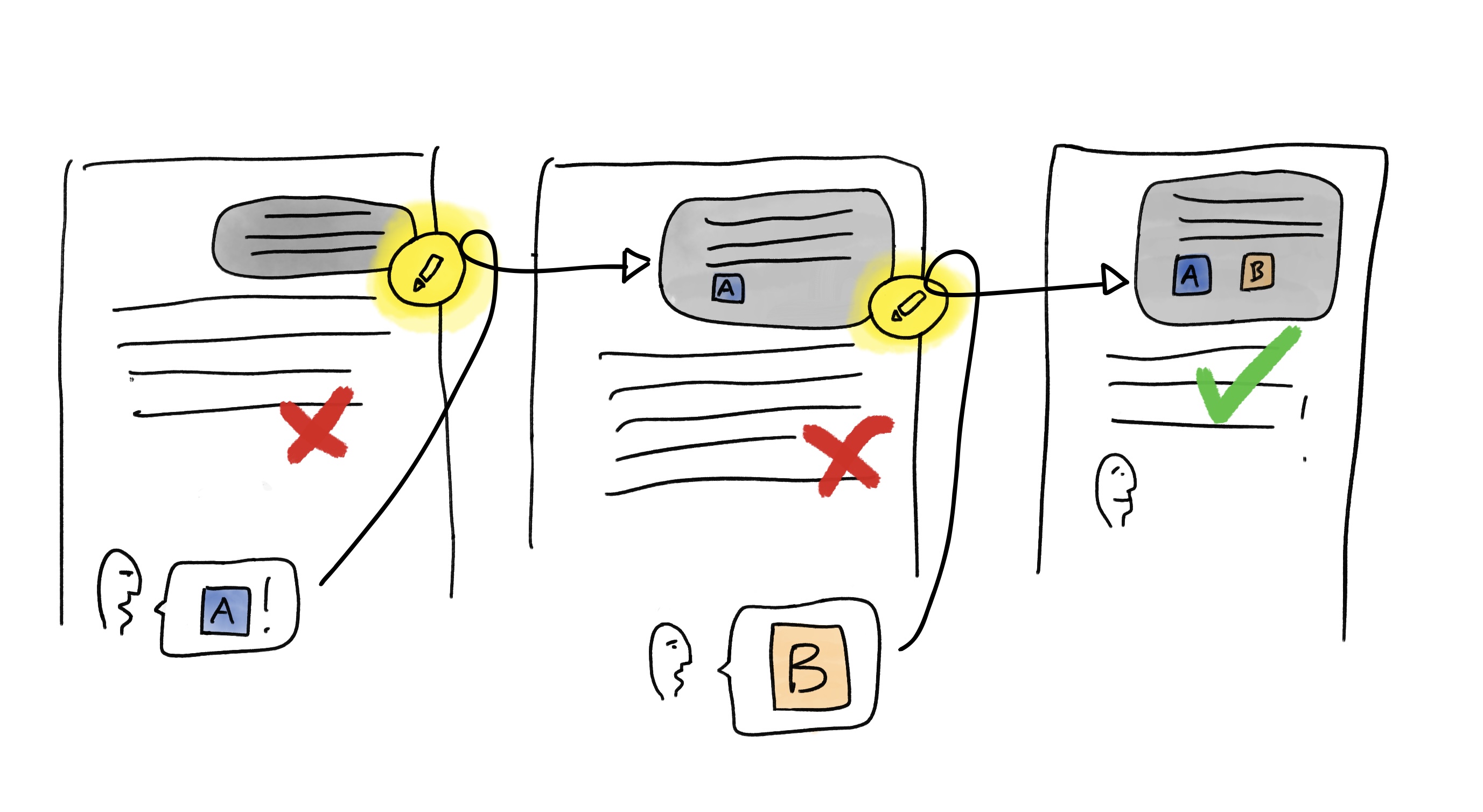

Alternatively, if you do end up on a tangent that yields an interesting insight, scroll back to the point in the conversation where you wish you’d had that insight and restart from there: edit the first message of the tangent so that the conversation continues with your newfound knowledge.
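
If you talk to a model through code rather than a chat window, both moves come down to the same operation on the message history: keep everything before the message you want to change, replace that message, and drop everything after it. Here is a minimal sketch of that idea. It assumes the conversation is stored as a plain list of role/content dictionaries; the `Message` type and the `rewind_and_edit` helper are hypothetical names made up for illustration, not part of any particular library.

```python
from __future__ import annotations

from typing import TypedDict


class Message(TypedDict):
    role: str      # "user" or "assistant" (assumed convention)
    content: str


def rewind_and_edit(history: list[Message], index: int, new_content: str) -> list[Message]:
    """Hypothetical helper: keep messages before `index`, replace the user
    message at `index` with `new_content`, and drop everything after it.
    Returns a new list; the original history is left untouched."""
    if history[index]["role"] != "user":
        raise ValueError("can only edit a user message")
    edited: Message = {"role": "user", "content": new_content}
    return history[:index] + [edited]


# A conversation that has drifted into a clarification side quest.
history: list[Message] = [
    {"role": "user", "content": "Summarize this report."},
    {"role": "assistant", "content": "(a summary that is too long)"},
    {"role": "user", "content": "Shorter, please."},
    {"role": "assistant", "content": "(still too long)"},
]

# Instead of sending yet another clarification, fold the caveats into message 0
# and restart from there.
fresh = rewind_and_edit(
    history, 0, "Summarize this report in three bullet points, under 50 words total."
)
```

The `fresh` list is what you would send to the model next: it contains only the improved request, so none of the side conversation is left to distract it. Because the helper returns a new list, the old branch stays available in `history` if you want to look back at it.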